Particle Video

Abstract

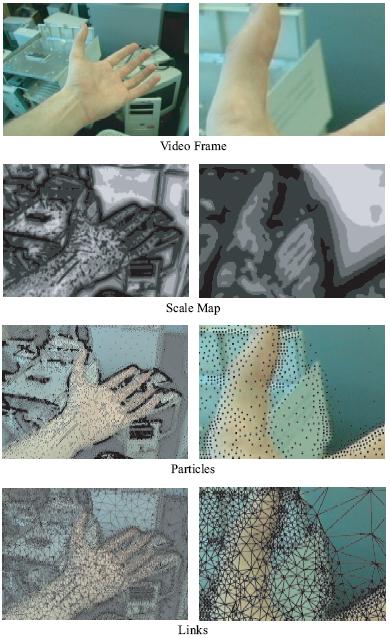

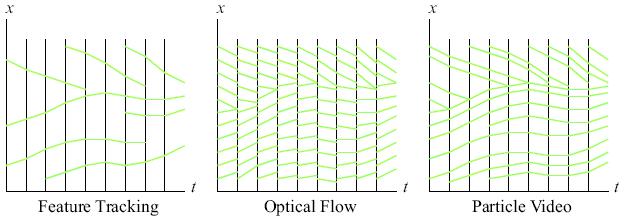

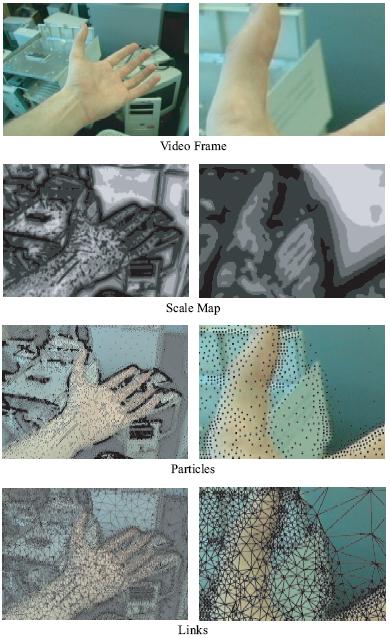

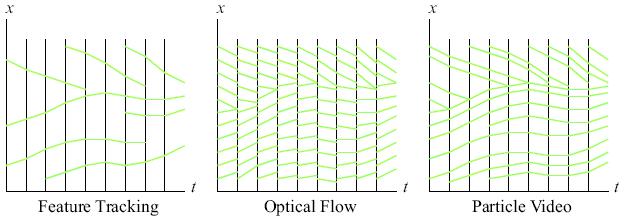

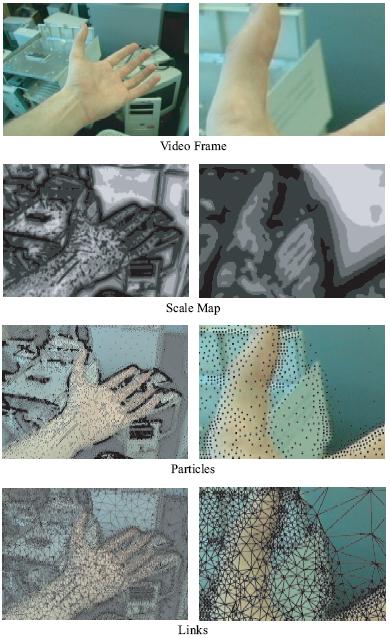

This project presents a new approach to motion estimation in video. We represent video motion using a set of particles. Each particle is an image point sample with a long-duration trajectory and other properties. To optimize these particles, we measure point-based matching along the particle trajectories and distortion between the particles. The resulting motion representation is useful for a variety of applications and cannot be directly obtained using existing methods such as optical flow or feature tracking. We demonstrate the algorithm on challenging real-world videos that include complex scene geometry, multiple types of occlusion, regions with low texture, and non-rigid deformations.
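The abstract names the two ingredients of the particle objective: point-based matching along each trajectory, and distortion between particles. The sketch below is a toy illustration of how such an objective could be evaluated, not the authors' implementation. It assumes grayscale frames stored as nested lists, a single reference intensity per particle (`appearance`), a squared-error matching term, a distortion term that compares the frame-to-frame motion of linked particles, and a hypothetical weight `alpha`. All names (`Particle`, `sample`, `data_term`, `distortion_term`, `total_energy`) are hypothetical. Only the objective is shown; optimizing the trajectories, adding or pruning particles, and choosing links are left out.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Frame = List[List[float]]  # grayscale frame indexed as frame[y][x] (assumed layout)


@dataclass
class Particle:
    """Hypothetical particle: an image point sample with a multi-frame trajectory."""
    start_frame: int                      # first frame in which the particle exists
    positions: List[Tuple[float, float]]  # (x, y) at start_frame, start_frame + 1, ...
    appearance: float                     # assumed reference intensity to match

    def end_frame(self) -> int:
        """One past the last frame in which the particle exists."""
        return self.start_frame + len(self.positions)

    def at(self, t: int) -> Optional[Tuple[float, float]]:
        """Position in frame t, or None if the particle does not exist there."""
        i = t - self.start_frame
        return self.positions[i] if 0 <= i < len(self.positions) else None


def sample(frame: Frame, x: float, y: float) -> float:
    """Bilinearly interpolate the frame at (x, y), clamping to the image border."""
    h, w = len(frame), len(frame[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * frame[y0][x0] + fx * frame[y0][x1]
    bottom = (1 - fx) * frame[y1][x0] + fx * frame[y1][x1]
    return (1 - fy) * top + fy * bottom


def data_term(p: Particle, frames: Sequence[Frame]) -> float:
    """Point-based matching: squared appearance error summed along the trajectory."""
    return sum(
        (sample(frames[p.start_frame + i], x, y) - p.appearance) ** 2
        for i, (x, y) in enumerate(p.positions)
    )


def distortion_term(a: Particle, b: Particle) -> float:
    """Penalize linked particles whose frame-to-frame motions differ."""
    start = max(a.start_frame, b.start_frame)
    end = min(a.end_frame(), b.end_frame())
    total = 0.0
    for t in range(start, end - 1):  # consecutive frame pairs where both exist
        (ax0, ay0), (ax1, ay1) = a.at(t), a.at(t + 1)
        (bx0, by0), (bx1, by1) = b.at(t), b.at(t + 1)
        dx = (ax1 - ax0) - (bx1 - bx0)
        dy = (ay1 - ay0) - (by1 - by0)
        total += dx * dx + dy * dy
    return total


def total_energy(
    particles: Sequence[Particle],
    links: Sequence[Tuple[int, int]],
    frames: Sequence[Frame],
    alpha: float = 1.0,
) -> float:
    """Objective an optimizer would minimize; alpha is a hypothetical weight."""
    data = sum(data_term(p, frames) for p in particles)
    distortion = sum(distortion_term(particles[i], particles[j]) for i, j in links)
    return data + alpha * distortion
```

An optimizer would adjust each particle's `positions` to lower `total_energy`. In this sketch a large `alpha` pushes linked particles to move together, while a small `alpha` lets each trajectory follow its own appearance match more closely.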

References

Peter Sand and Seth Teller, Particle Video: Long-Range Motion Estimation using Point Trajectories, IJCV (special issue on best papers of CVPR 2006), 2008

Peter Sand and Seth Teller, Particle Video: Long-Range Motion Estimation using Point Trajectories, CVPR, 2006

(CVPR Talk Slides, Videos)

Peter Sand, Long-Range Video Motion Estimation using Point Trajectories, PhD Thesis, 2006

Code

A simplified particle video implementation can be downloaded here. This is an alpha release. Please let us know if you have any trouble with it.

Data